1. DataDog Agent Setup

Prerequisites: This guide assumes you have a DataDog Agent already installed and configured with OpenTelemetry support.

If you need to install the DataDog Agent, please refer to the DataDog Agent Installation Guide.
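Before instrumenting the application, it can help to confirm that the Agent is actually listening for OTLP traffic. The sketch below is not part of the DataDog or OpenLIT tooling: `otlp_port_open` is a hypothetical helper written for this example, and the host `127.0.0.1` with ports `4317` (gRPC) and `4318` (HTTP) are the conventional OTLP defaults, which your Agent configuration may override.

```python
import socket


def otlp_port_open(host: str = "127.0.0.1", port: int = 4318, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # Assumed default OTLP ports; change these if your Agent uses others.
    for name, port in (("gRPC", 4317), ("HTTP", 4318)):
        status = "reachable" if otlp_port_open(port=port) else "not reachable"
        print(f"OTLP {name} port {port}: {status}")
```

A reachable port only shows that something is listening there; it does not prove that OTLP ingestion is enabled in the Agent.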

2. Instrument your application

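You can instrument your application using either the SDK or the CLI.

For direct integration into your Python applications, use the SDK. Refer to the OpenLIT Python SDK repository for more advanced configurations and use cases.

The SDK can be configured through either of the following:

- Function Arguments

- Environment Variables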

The DataDog Agent handles authentication and forwarding to DataDog. No API key is needed in the application configuration.
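The two configuration styles named above (function arguments and environment variables) might look like the following sketch. It assumes the Agent accepts OTLP over HTTP at `http://127.0.0.1:4318`, which depends on your Agent configuration. `resolve_otlp_endpoint` and `init_openlit` are hypothetical helpers written for this example; `openlit.init(otlp_endpoint=...)` and the standard `OTEL_EXPORTER_OTLP_ENDPOINT` variable are the usual OpenLIT and OpenTelemetry settings.

```python
import os
from typing import Optional

# Assumed local DataDog Agent OTLP/HTTP endpoint; adjust to your Agent setup.
DEFAULT_AGENT_ENDPOINT = "http://127.0.0.1:4318"


def resolve_otlp_endpoint(explicit: Optional[str] = None) -> str:
    """Pick the endpoint: explicit argument, then environment variable, then default."""
    return (
        explicit
        or os.environ.get("OTEL_EXPORTER_OTLP_ENDPOINT")
        or DEFAULT_AGENT_ENDPOINT
    )


def init_openlit(endpoint: Optional[str] = None) -> bool:
    """Initialize OpenLIT if it is installed; return False if the package is missing."""
    try:
        import openlit  # pip install openlit
    except ImportError:
        return False
    # No API key is passed here: the Agent handles authentication with DataDog.
    openlit.init(otlp_endpoint=resolve_otlp_endpoint(endpoint))
    return True
```

Calling `init_openlit("http://127.0.0.1:4318")` corresponds to the function-argument style. Exporting `OTEL_EXPORTER_OTLP_ENDPOINT` before starting the process and calling `init_openlit()` corresponds to the environment-variable style.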

3. Exploring Telemetry in DataDog

Once your AI application is sending telemetry data to DataDog, you can explore and analyze it using DataDog’s powerful observability features:

Software Catalog

View all your AI services and their connections:

- Go to APM → Software Catalog

- Select Map to see how services are connected

- Change Map layout to Cluster or Flow for different views

- Select Catalog view, then click a service for performance summary

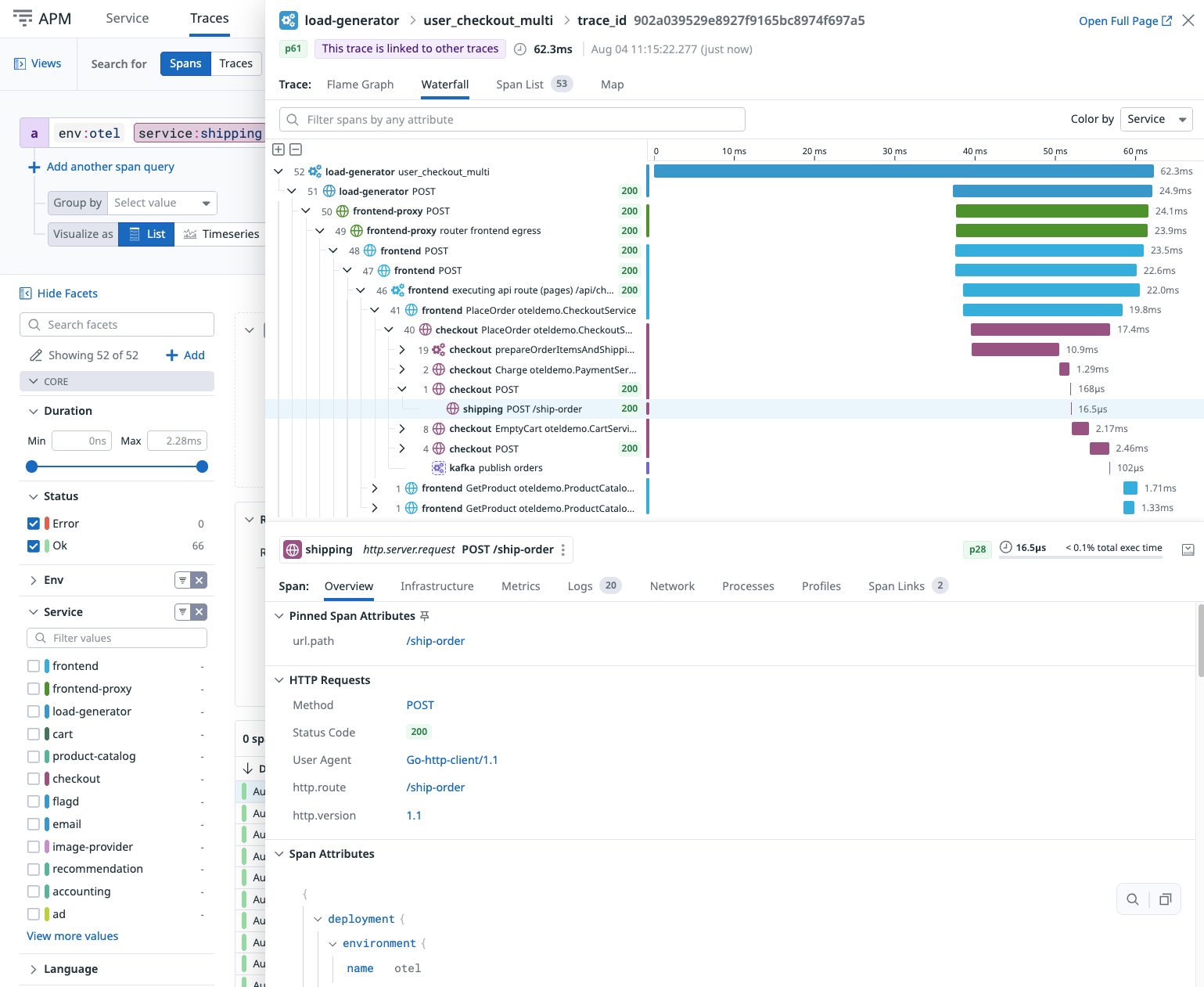

Trace Explorer

Explore traces from your AI applications:

- Navigate to Performance → Setup Guidance → View Traces

- Select an indexed span to view full trace details

- View correlated data across tabs:

  - Infrastructure metrics for services with Host Metrics

  - Runtime metrics for implemented services

  - Log entries correlated with traces

  - Span links connected to traces

After your OpenLIT-instrumented AI application starts sending data, it may take a few minutes for traces and metrics to appear in DataDog. Telemetry is generated automatically once your application processes AI requests.